When we build LLM applications, there is always a recurring question: “What vector store will we use?”. In Azure, there are several native solutions. Some of them were discussed in previous posts.

- Azure Cognitive Search: supports vector search but also hybrid search with semantic reranking as discussed here

- Azure Redis Cache Enterprise: check my blog post

- Azure Cosmos DB for MongoDB Core: see Microsoft Learn

In addition to the above, you can of course host your own vector database in a container such as Qdrant or others. You can install these on any service that supports containers such as App Service, Container Instances, Container Apps, or Kubernetes.

Using PostgreSQL

If you are familiar with Azure Database for PostgreSQL flexible servers, you can use it as a vector store, as long as you install the vector extension. This extension can be enabled in all compute tiers. I installed PostgreSQL and set the compute tier to Burstable with size Standard_B1ms (1 vCore, 2GB). This is great for testing and will cost around 20 euros per month with 32GB of storage. For production use, the monthly cost will start from about 150 euros at the lowest General Purpose tier.

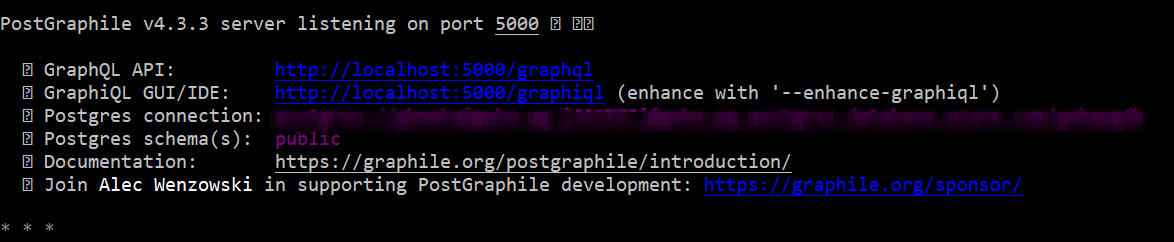

After deployment, you need to enable the vector extension. In Server Parameters, search for azure.extensions and select VECTOR from the list. Then click Save.

When done, grab the connection details from the Connect pane:

In pgAdmin, register the server with the above details. Connect to the server and create a database. Ensure you configure the firewall settings in Azure to allow your IP address to connect to the server.

Inside the database, go to Extensions and add the vector extension:

Note: in the code below, we will use LangChain. LangChain will try to enable the vector extension if it is not enabled

Note: if you do not want to install pgAdmin, you can create the database from the Azure Portal or use the Azure CLI.

Working with the vector store

Although you can create and query tables that contain vectors with plain SQL, we will use LangChain as a higher-level library that takes care of many of the details for us.

Take a look at the following Python code that creates a few embeddings (vectors) and then uses RAG (retrieval augmented generation) to answer a question with OpenAI’s text-davinci-003 model.

Note: the code is on Github as well

import os

import getpass

# read from .env file

from dotenv import load_dotenv

load_dotenv()

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.text_splitter import CharacterTextSplitter

from langchain.vectorstores import PGVector

from langchain.document_loaders import TextLoader

from langchain.chains import RetrievalQA

from langchain.llms import OpenAI

loader = TextLoader("./state_of_the_union.txt")

documents = loader.load()

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)

docs = text_splitter.split_documents(documents)

embeddings = OpenAIEmbeddings()

pgpassword = os.getenv("PGPASSWORD", "")

if not pgpassword:

pgpassword = getpass.getpass("Enter pgpassword: ")

CONNECTION_STRING = f"postgresql+psycopg2://pgadmin:{pgpassword}@pg-vec-geba.postgres.database.azure.com:5432/pgvector"

COLLECTION_NAME = "state_of_the_union_test"

# if you run this code more than once, you will duplicated vectors

# no upserts

db = PGVector.from_documents(

embedding=embeddings,

documents=docs,

collection_name=COLLECTION_NAME,

connection_string=CONNECTION_STRING

)

retriever = db.as_retriever()

query = "What did the president say about Ketanji Brown Jackson"

# LLM will default to text-davinci-003 because we are using a completion endpoint

# versus a chat endpoint

qa = RetrievalQA.from_chain_type(llm=OpenAI(), chain_type="stuff", retriever=retriever)

answer = qa.run(query)

print(answer)

The code above requires a .env file with the following content:

OPENAI_API_KEY=OPENAI_API_KEY

PGPASSWORD=PASSWORD_TO_POSTGRES

You will also need the State of the Union text file from here.

Before running the code, install the following packages:

pip install pgvector

pip install openai

pip install psycopg2-binary

pip install tiktoken

The code does the following:

- Import required libraries: important here is the

PGVectorimport - Loads the text file and splits it into chunks: chunking strategy is not too important here; just use chunks of 1000 characters

- Create an instance of type

OpenAIEmbeddings, later used to create a vector per chunk for storage in PostgreSQL; it will also be used when doing queries with a retrieval QA chain (see below); usestext-embedding-ada-002embedding model - Construct the connection string for later use and set a collection name: collections are a way to store vectors together; the collections you create are kept in a table and each vector references the collection

- Create an instance of

PGVectorwithPGVector.from_documents: this will create/use tables to hold the collection(s) and vectors for you; all chunks will be vectorized and stored in a table; we will take a look at those tables in a moment; in a real application, you would reference existing tables and another process would create/update the vectors - Create a retriever (

qa) from thePGVectorinstance for use in a retrieval QA chain - Run a query and print the answer: the

qa.run (query)line does the n-nearest neighbor vector search in PostgreSQL (via the retriever), creates a meta-prompt with the relevant context, and returns the OpenAI model response in one step

In the PostgreSQL database, the above code creates two tables:

The collection table contains the collections you create from code. Each collection has a unique ID. The embedding table contains the vectors. Each vector has a unique ID and belongs to a collection. The fields of the embedding table are:

- uuid: unique ID of the vector

- collection_id: collection ID referencing the collection table

- embedding: a field of type vector that stores the embedding (1536 dimensions)

- document: the chunk of text that was vectorized

- cmetadata: a JSON field with a link to the source file

- custom_id: an id that is unique for each run

Note that when you run the sample Python code multiple times, you will have duplicated content. In a real application, you should avoid that. The process that creates and stores the vectors will typically be separate from the process that queries them.

⚠️ Important: Today, LangChain cannot search over all vectors in all collections. You always need to specify the collection to search. If you do need to search over all vectors, you can use SQL statements instead.

The search has the following properties:

- Distance strategy: cosine similarity; the pgvector extension also supports L2 distance and inner product; the code above uses the

text-embedding-ada-002embeddings model by default; with that model, you should use cosine similarity; LangChain uses cosine similarity as the default for PGVector so that’s a match! 👏 - Exact nearest neighbor search: although this provides perfect recall, it can get slow when there are many vectors because the entire table is scanned; the extension supports the creation of indexes to perform an approximate nearest neighbor search using IVFFLat or HNSW; see pgvector on GitHub for more details and also this article from Crunchy Data.

Note: most other vector databases use HNSW as the index type (e.g., Azure Cognitive Search, Qdrant, …); unlike IVFFLat you can create this index without having any vectors in your database table; at the time of writing (end of September 2023), the version of the vector extension on Azure was 0.4.1 and did not support HNSW; HNSW requires version 0.5.0 or higher

Conclusion

Azure Database for PostgreSQL with the vector extension is an interesting alternative to other vector database solutions in Azure. This is especially the case when PostgreSQL is your database of choice! In this post, we have shown how LangChain supports it with a simple example. If you do not use LangChain or other libraries, you can simply use SQL statements to create and search indexes as documented here.

The drawback of using PostgreSQL is that you need to know a bit more about exact and approximate nearest neighbor searches and the different index mechanisms. That’s actually a good thing if you want to create production applications with good performance. For a simple POC with not a lot of data, you can skip all of this and perform exact searches.

Besides the free tier of Azure Cognitive Search, the configuration above is the service with the lowest cost for POCs that need vector search. On top of that, the cheapest PostgreSQL option has more storage than Cognitive Search’s free tier (32GB vs. 50MB). Adding more storage is easy and relatively cheap as well. Give it a go and tell me what you think!