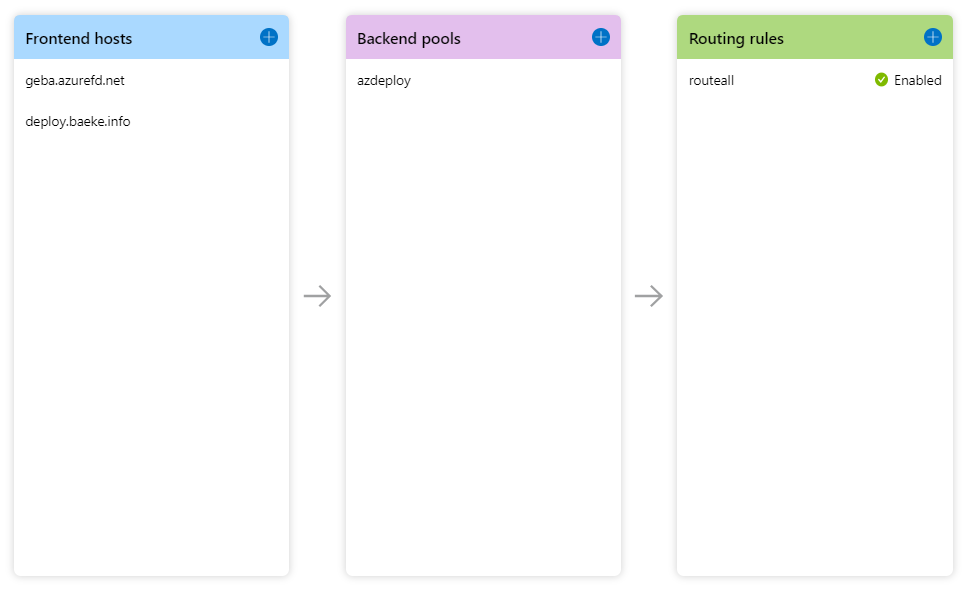

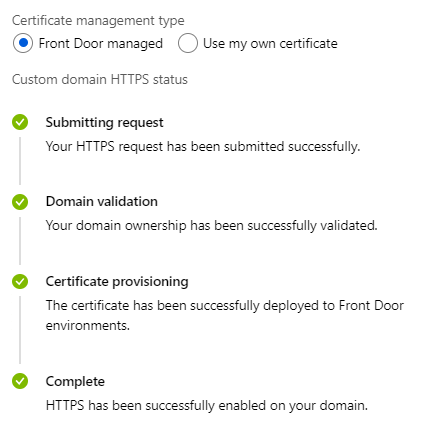

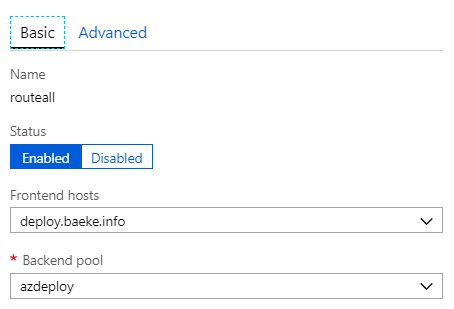

Adding Azure AD integration to a website is often confusing if you are just getting started. Let’s face it, not everybody has the opportunity to dig deep into such topics. For https://deploy.baeke.info, I wanted to enable Azure AD authentication so that only a select group of users in our AD tenant can call the back-end webhooks exposed by webhookd. The architecture of the application looks like this:

The process is as follows:

- Load the client from https://deploy.baeke.info

- The client obtains a token from Azure Active Directory. The user has to authenticate, which in our case means providing a second factor as well

- When the user performs an action that invokes a webhook, the call is sent to API Management

- API Management verifies the token and passes the request to webhookd over https with basic authentication

- The response is received by API Management which passes it unmodified to the client

I know you are an observant reader who is probably thinking: “why not present the token to webhookd directly?” That’s possible, but then I would not have had a reason to use API Management! 😉

Before we begin, you might want some background on what we are going to do. Take a look at this excellent YouTube video that explains topics such as OAuth 2.0 and OpenID Connect in an easy-to-understand format:

Create an application in Azure AD

The first step is to create a new application registration. You can do this from https://aad.portal.azure.com. In Azure Active Directory, select App registrations or use the new App registrations (Preview) experience.

For single page applications (SPAs), the application type should be Web app / API. As the App ID URI and Home page URL, I used https://deploy.baeke.info.

In my app, a user will authenticate to Azure AD with a Login button. Clicking that button brings the user to a Microsoft hosted page that asks for credentials:

Naturally, this implies that the authentication process, when finished, needs to find its way back to the application. In that process, it will also bring along the obtained authentication token. To configure this, specify the Reply URLs. If you also develop on your local machine, include the local URL of the app as well:

For a SPA, you need to set an additional option in the application manifest (via the Manifest button):

"oauth2AllowImplicitFlow": true

This implicit flow is well explained in the above video and also here.
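To make the implicit flow a little more concrete, here is a rough sketch of the kind of authorization URL the browser is redirected to and of how the token comes back in the URL fragment of the reply URL. The tenant name, the nonce value and both function names are placeholders of mine and do not come from the application; ADAL builds and validates all of this for you.

```javascript
// Sketch of the implicit flow against the Azure AD v1 authorize endpoint.
// Tenant, nonce and function names are illustrative assumptions.
function buildAuthorizeUrl(tenant, clientId, redirectUri, nonce) {
  const params = new URLSearchParams({
    response_type: 'id_token', // implicit flow: the token is returned directly
    client_id: clientId,
    redirect_uri: redirectUri, // must match one of the configured Reply URLs
    nonce: nonce               // random value that is echoed back inside the token
  });
  return 'https://login.microsoftonline.com/' + encodeURIComponent(tenant) +
    '/oauth2/authorize?' + params.toString();
}

// After sign-in, Azure AD appends the result to the reply URL as a fragment.
function parseCallbackFragment(hash) {
  const params = new URLSearchParams(hash.replace(/^#/, ''));
  return {
    idToken: params.get('id_token'),
    error: params.get('error')
  };
}
```

Because the token travels in the fragment (the part after the #), it is not sent to the web server that hosts the page; only the script running in the browser gets to see it.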

This is basically all you have to do for this specific application. In other cases, you might want to grant access from this application to other applications such as an API. Take a look at this post for more information about calling the Graph API or your own API.

We will just present the token obtained by the client to API Management. In turn, API Management will verify the token. If it does not pass the verification steps, a 401 error will be returned. We will look at API Management in a later post.

A bit of client code

Just browse to https://deploy.baeke.info and view the source. Authentication is performed with ADAL for JavaScript. ADAL stands for the Active Directory Authentication Library. The library is loaded from the CDN.

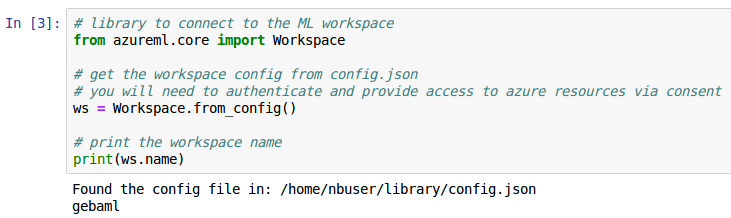

This is a simple Vue application, so we have a Vue instance for data and methods. In that Vue instance's data, authContext is set up via a call to new AuthenticationContext. The clientId is the Application ID of the registered app we created above:

    authContext: new AuthenticationContext({
        clientId: '1fc9093e-8a95-44f8-b524-45c5d460b0d8',
        postLogoutRedirectUri: window.location
    })

To authenticate, the Login button’s click handler calls authContext.login(). The login method uses a redirect. It is also possible to use a pop-up window by setting popUp: true in the object passed to new AuthenticationContext() above. Personally, I do not like that approach though.

In the created lifecycle hook of the Vue instance, there is some code that handles the callback. When not in the callback, getCachedUser() is used to check if the user is logged in. If she is, the token is obtained via acquireToken() and stored in the token variable of the Vue instance. The acquireToken() method allows the application to obtain tokens silently without prompting the user again. The first parameter of acquireToken() is the resource to get a token for; here, that is the application ID of the registered app itself.
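The logic described above could look roughly like the sketch below. It is not copied from the site, so the actual code may differ. The function name handleAuth, its return values and the onToken callback are made up for illustration; isCallback(), handleWindowCallback(), getCachedUser() and acquireToken() are the ADAL methods involved.

```javascript
// Sketch of the callback handling; the real code on the site may differ.
// authContext is an ADAL AuthenticationContext, hash is window.location.hash,
// and onToken is a hypothetical callback that stores the token.
function handleAuth(authContext, clientId, hash, onToken) {
  if (authContext.isCallback(hash)) {
    // Returning from Azure AD: let ADAL process the token in the URL fragment.
    authContext.handleWindowCallback();
    return 'callback';
  }
  const user = authContext.getCachedUser();
  if (!user) {
    return 'anonymous'; // not logged in yet; wait for the Login button
  }
  // Logged in: obtain a token for our own application ID without prompting.
  authContext.acquireToken(clientId, function (errorDesc, token) {
    if (token) {
      onToken(token);
    } else {
      console.log('Could not acquire token: ' + errorDesc);
    }
  });
  return 'authenticated';
}
```

In the Vue instance, something like this could be called from created with this.authContext, the application ID, window.location.hash and a callback that assigns the token to this.token.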

Note that the token (an ID token) is not encrypted. You can paste the token in https://jwt.ms and look inside. Here’s an example (click to navigate):
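If you prefer to peek inside the token from code rather than pasting it into a website, a minimal sketch could look like this. The function name decodeJwtPayload is my own, and the sketch assumes an environment where atob and TextDecoder are available (browsers and recent Node.js versions). It only decodes the payload; it does not verify the signature, so never trust the result for authorization decisions.

```javascript
// Decode (not verify!) the payload of a JWT such as an Azure AD ID token.
function decodeJwtPayload(token) {
  const parts = token.split('.');
  if (parts.length !== 3) {
    throw new Error('Expected a JWT with three dot-separated parts');
  }
  // Convert base64url to regular base64 and restore the padding.
  let b64 = parts[1].replace(/-/g, '+').replace(/_/g, '/');
  while (b64.length % 4 !== 0) {
    b64 += '=';
  }
  // atob yields a binary string; turn it into bytes and decode them as UTF-8.
  const binary = atob(b64);
  const bytes = Uint8Array.from(binary, function (c) { return c.charCodeAt(0); });
  return JSON.parse(new TextDecoder().decode(bytes));
}
```

The returned object contains the claims, for instance aud (the application ID the token was issued for) and exp (the expiry time).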

Calling the back-end API

In this application, the calls go to API Management. Here is an example of a call with axios:

    axios.post('https://geba.azure-api.net/rg/create?rg=' + this.createrg.rg, null, this.getAxiosConfig(this.token))
        .then(function(result) {
            console.log("Got response...")
            self.response = result.data;
        })
        .catch(function(error) {
            console.log("Error calling webhook: " + error)
        })
    ...

The third parameter is a call to getAxiosConfig that passes the token. getAxiosConfig uses the token to create the Authorization header:

    getAxiosConfig: function(token) {
        const config = {
            headers: {
                "authorization": "bearer " + token
            }
        }
        return config
    }

As discussed earlier, the call goes to API Management which will verify the token before allowing a call to webhookd.
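Since API Management answers with a 401 when the token does not pass verification, the catch handler could tell that case apart from other failures. The sketch below is one way to do that and is not part of the site's code; describeWebhookError and the message texts are made up. It relies on axios setting error.response when the server replied with an error status.

```javascript
// Sketch: turn an axios error into a user-facing message.
// axios sets error.response when the server answered with a non-2xx status.
function describeWebhookError(error) {
  if (error.response && error.response.status === 401) {
    return 'Not authorized: the token is missing, expired or was rejected. Please log in again.';
  }
  if (error.response) {
    return 'Webhook call failed with HTTP status ' + error.response.status;
  }
  // No response at all: network problem, CORS failure or request setup error.
  return 'Could not reach API Management: ' + error.message;
}
```

In the catch handler of the axios call, the result could be shown to the user instead of only being logged to the console.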

Conclusion

With the source of https://deploy.baeke.info and this post, it should be fairly straightforward to enable Azure AD authentication in a simple single page web application. Note that the code is kept as simple as possible and does not cover any edge cases. In a future post, we will take a look at API Management.