In this post, we will take a look at building an Azure AI Search index with a custom skill. We will use the Azure AI Search Python SDK to do the following:

- create a search index: a search index contains content to be searched

- create a data source: a data source tells an Azure AI Search indexer where to get input data

- create a skillset: a skillset is a collection of skills that process the input data during the indexing process; you can use built-in skills but also build your own skills

- create an indexer: the indexer pulls input data from the data source, optionally transforms it with the skills in a skillset, and writes the results to the search index

If you are more into videos, I already created a video about this topic. In the video, I use the REST API to define the resources above. In this post, I will use the Python SDK.

What do we want to achieve?

We want to build an application that lets a user search for images with a text query or with a similar image, as in the diagram below:

The application uses an Azure AI Search index to provide search results. An index is basically a collection of JSON documents that can be searched with various techniques.

The input data to create the index is just a bunch of .jpg files in Azure Blob Storage. The index will need fields to support the two different types of searches (text and image search):

- a text description of the image: we will need to generate the description from the image; we will use GPT-4 Vision to do so; the description supports keyword-based searches

- a text vector of the description: with text vectors, we can search for descriptions similar to the user’s query; it can provide better results than keyword-based searches alone

- an image vector of the image: with image vectors, we can supply an image and search for similar images in the index

I described building this application in a previous blog post. In that post, we pushed the index content to the index. In this post, we create an indexer that pulls in the data, potentially on a schedule. Using an indexer is recommended.

Creating the index

If you have an Azure subscription, first create an Azure AI Search resource. The code we write requires at least the basic tier.

Although you can create the index in the portal, we will create it using the Python SDK. At the time of writing (December 2023), you have to use a preview version of the SDK to support integrated vectorization. The notebook we use contains instructions about installing this version. The notebook is here: https://github.com/gbaeke/vision/blob/main/image_index/indexer-sdk.ipynb

The notebook starts with the necessary imports and also loads environment variables via a .env file. See the README of the repo to learn about the required variables.

To create the index, we define a blog_index function that returns an index definition. Here’s the start of the function:

def blog_index(name: str):
    fields = [
        SearchField(name="path", type=SearchFieldDataType.String, key=True),
        SearchField(name="name", type=SearchFieldDataType.String),
        SearchField(name="url", type=SearchFieldDataType.String),
        SearchField(name="description", type=SearchFieldDataType.String),
        SimpleField(name="enriched", type=SearchFieldDataType.String, searchable=False),
        SearchField(
            name="imageVector",
            type=SearchFieldDataType.Collection(SearchFieldDataType.Single),
            searchable=True,
            vector_search_dimensions=1024,
            vector_search_profile="myHnswProfile"
        ),
        SearchField(
            name="textVector",
            type=SearchFieldDataType.Collection(SearchFieldDataType.Single),
            searchable=True,
            vector_search_dimensions=1536,
            vector_search_profile="myHnswProfile"
        ),
    ]

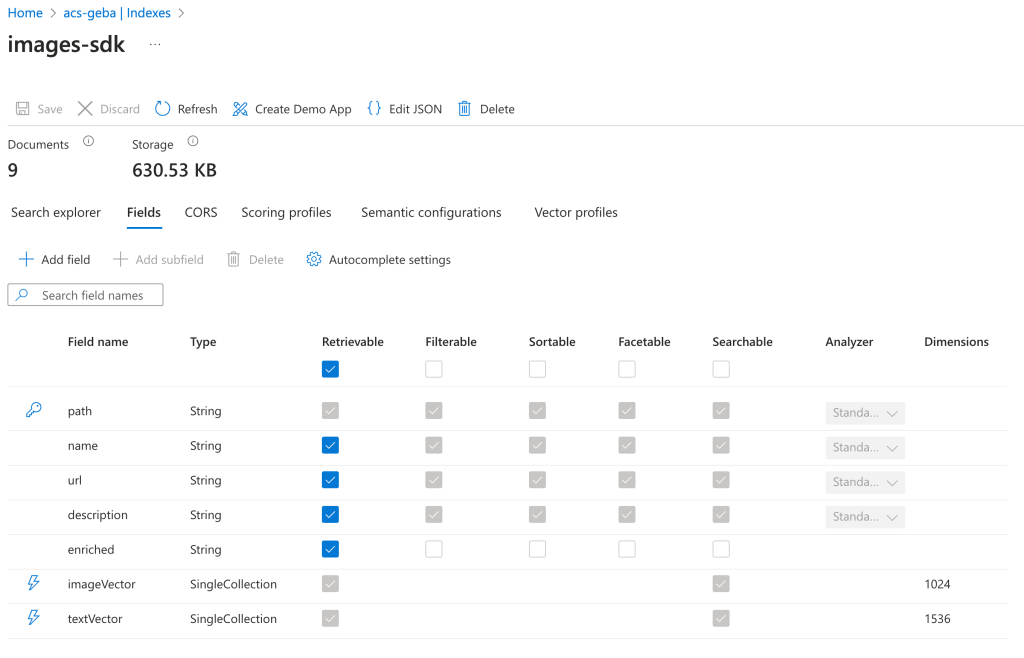

Above, we define an array of fields for the index. We will have 7 fields. The first three fields will be retrieved from blob storage metadata:

- path: base64-encoded url of the file; will be used as unique key

- name: name of the file

- url: full url of the file in Azure blob storage

The link between these fields and the metadata is defined in the indexer we will create later.

Next, we have the description field. We will generate the image description via GPT-4 Vision during indexing. The indexer will use a custom skill to do so.

The enriched field is there for debugging. It shows the enrichments added by custom or built-in skills. You can remove that field if you wish.

To finish, we have vector fields. These fields are designed to hold arrays of a specific size:

- imageVector: a vector field that can hold 1024 values; the image vector model we use outputs 1024 dimensions

- textVector: a vector field that can hold 1536 values; the text vector model we use outputs that number of dimensions
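To make the schema concrete, here is a hypothetical sketch of what one document in this index could look like once the indexer has filled it, plus a small check that the vector fields carry the dimensions declared in blog_index. The field values (including the mystorage account name) are placeholders, and validate_document is an illustrative helper, not part of the SDK.

```python
# Hypothetical sketch: the shape of one document in the "images-sdk" index,
# plus a check that vector fields have the dimensions declared in blog_index.
from typing import Any

# Dimensions declared for the vector fields in the index definition
VECTOR_DIMENSIONS = {"imageVector": 1024, "textVector": 1536}
EXPECTED_FIELDS = {"path", "name", "url", "description", "enriched",
                   "imageVector", "textVector"}


def validate_document(doc: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the document fits the schema."""
    problems = []
    unknown = set(doc) - EXPECTED_FIELDS
    if unknown:
        problems.append(f"unknown fields: {sorted(unknown)}")
    if "path" not in doc:
        problems.append("missing key field 'path'")
    for field, dims in VECTOR_DIMENSIONS.items():
        vector = doc.get(field)
        if vector is not None and len(vector) != dims:
            problems.append(f"{field} has {len(vector)} values, expected {dims}")
    return problems


example_doc = {
    "path": "aHR0cHM6Ly9teXN0b3JhZ2U",  # placeholder base64-encoded blob path
    "name": "city.jpg",
    "url": "https://mystorage.blob.core.windows.net/images/city.jpg",  # hypothetical account
    "description": "An image of a city skyline.",
    "imageVector": [0.0] * 1024,
    "textVector": [0.0] * 1536,
}

print(validate_document(example_doc))  # → []
```

A mismatch between the embedding model's output size and vector_search_dimensions is a common reason documents fail to index, which is why the dimensions are worth checking explicitly.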

Note that the vector fields reference a vector search profile. We create that profile in the next block of code in the blog_index function:

    vector_config = VectorSearch(
        algorithms=[
            HnswVectorSearchAlgorithmConfiguration(
                name="myHnsw",
                kind=VectorSearchAlgorithmKind.HNSW,
                parameters=HnswParameters(
                    m=4,
                    ef_construction=400,
                    ef_search=500,
                    metric=VectorSearchAlgorithmMetric.COSINE,
                ),
            ),
            ExhaustiveKnnVectorSearchAlgorithmConfiguration(
                name="myExhaustiveKnn",
                kind=VectorSearchAlgorithmKind.EXHAUSTIVE_KNN,
                parameters=ExhaustiveKnnParameters(
                    metric=VectorSearchAlgorithmMetric.COSINE,
                ),
            ),
        ],
        profiles=[
            VectorSearchProfile(
                name="myHnswProfile",
                algorithm="myHnsw",
                vectorizer="myOpenAI",
            ),
            VectorSearchProfile(
                name="myExhaustiveKnnProfile",
                algorithm="myExhaustiveKnn",
                vectorizer="myOpenAI",
            ),
        ],
        vectorizers=[
            AzureOpenAIVectorizer(
                name="myOpenAI",
                kind="azureOpenAI",
                azure_open_ai_parameters=AzureOpenAIParameters(
                    resource_uri="AZURE_OPEN_AI_RESOURCE",
                    deployment_id="EMBEDDING_MODEL_NAME",
                    api_key=os.getenv('AZURE_OPENAI_KEY'),
                ),
            ),
        ],
    )

Above, vector_config is an instance of the VectorSearch class, which holds algorithms, profiles and vectorizers:

- algorithms: Azure AI Search supports both HNSW (approximate nearest neighbor) and exhaustive KNN to find the nearest neighbors of an input vector; above, both algorithms are defined, and both use cosine similarity as the metric

- vectorizers: this defines the integrated vectorizer, which points to an Azure OpenAI resource and embedding model. You need to deploy that model in Azure OpenAI and give the deployment a name; at the time of writing (December 2023), this feature was in public preview

- profiles: a profile combines an algorithm and a vectorizer; we create two profiles, one for each algorithm; the vector fields use the myHnswProfile profile.

Note: a vector field configured for HNSW, which performs approximate nearest neighbor searches, still allows you to run an exhaustive search at query time; the sample searches at the bottom of the notebook use exhaustive searches over the entire vector space. The reverse is not possible: you cannot run an HNSW search on a field whose profile is configured for exhaustive KNN.
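To illustrate what "exhaustive" means, here is a minimal pure-Python sketch of an exhaustive KNN search with cosine similarity: every stored vector is scored against the query and the top k are returned. HNSW avoids this full scan by navigating a graph, trading a little recall for speed. The toy 3-dimensional vectors and file names are made up, and this is not how the service is implemented internally.

```python
# Illustrative sketch of an exhaustive (brute-force) k-nearest-neighbor search
# using cosine similarity; every vector is compared with the query.
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def exhaustive_knn(query: list[float], vectors: dict[str, list[float]],
                   k: int) -> list[tuple[str, float]]:
    """Score every stored vector against the query and return the k most similar."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional vectors; the real fields hold 1024 or 1536 values
vectors = {
    "city.jpg": [0.9, 0.1, 0.0],
    "beach.jpg": [0.0, 1.0, 0.2],
    "forest.jpg": [0.1, 0.2, 1.0],
}
print(exhaustive_knn([1.0, 0.0, 0.0], vectors, k=1))  # city.jpg is the nearest neighbor
```

The cost of this approach grows linearly with the number of documents, which is fine for a small image collection but is the reason HNSW is the usual default for larger indexes.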

We finish the function with the code below:

    semantic_config = SemanticConfiguration(
        name="my-semantic-config",
        prioritized_fields=PrioritizedFields(
            prioritized_content_fields=[SemanticField(field_name="description")]
        ),
    )

    semantic_settings = SemanticSettings(configurations=[semantic_config])

    return SearchIndex(name=name, fields=fields, vector_search=vector_config, semantic_settings=semantic_settings)

Above, we specify a semantic_config. It tells the semantic reranker which fields in our index contain valuable data; here, we use the description field. The config is used to create an instance of type SemanticSettings. You also have to enable the semantic reranker on your Azure AI Search resource to use this feature.

The function ends by returning an instance of type SearchIndex, which contains the fields array, the vector configuration and the semantic configuration.

Now we can use the output of this function to create the index:

service_endpoint = "https://acs-geba.search.windows.net"
index_name = "images-sdk"
key = os.getenv("AZURE_AI_SEARCH_KEY")

index_client = SearchIndexClient(service_endpoint, AzureKeyCredential(key))
search_client = SearchClient(service_endpoint, index_name, AzureKeyCredential(key))
index = blog_index(index_name)

# create the index
try:
    index_client.create_or_update_index(index)
    print("Index created or updated successfully")
except Exception as e:
    print("Index creation error", e)

The important part here is the creation of a SearchIndexClient that authenticates to our Azure AI Search resource. We use that client's create_or_update_index method to create or update our index. That method requires a SearchIndex parameter, which the blog_index function provides.

When that call succeeds, you should see the index in the portal. Text and vector fields are searchable.

The vector profiles should be present:

Click on an algorithm or vectorizer. It should match the definition in our code.

Now we can define the data source, skillset and indexer.

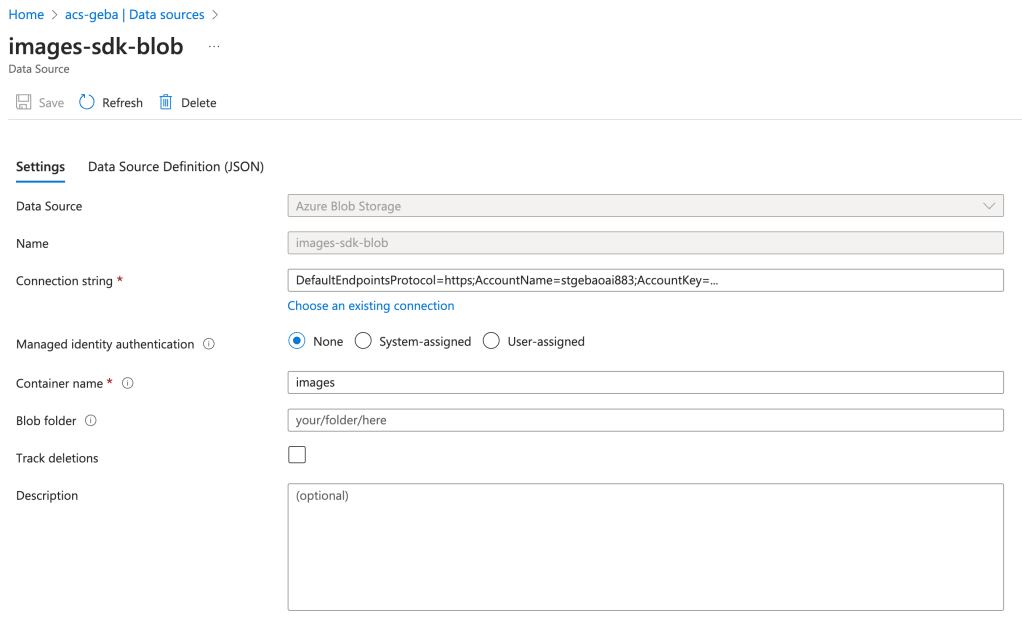

Data source

Our images are stored in Azure Blob Storage. The data source needs to point to that resource and specify a container. We can use the following code:

# Create a data source
ds_client = SearchIndexerClient(service_endpoint, AzureKeyCredential(key))
container = SearchIndexerDataContainer(name="images")

data_source_connection = SearchIndexerDataSourceConnection(
    name=f"{index_name}-blob",
    type="azureblob",
    connection_string=os.getenv("STORAGE_CONNNECTION_STRING"),
    container=container
)

data_source = ds_client.create_or_update_data_source_connection(data_source_connection)
print(f"Data source '{data_source.name}' created or updated")

The code is pretty self-explanatory. The data source is shown in the portal as below:

Skillset with two skills

Before we create the indexer, we define a skillset with two skills:

- AzureOpenAIEmbeddingSkill: a built-in skill that uses an Azure OpenAI embedding model and takes text as input; it returns a vector (embedding) of 1536 dimensions; this skill is not free; you will be billed for the vectors you create via your Azure OpenAI resource

- WebApiSkill: a custom skill that points to an endpoint that you need to build and host; you define the inputs and outputs of the custom skill; my custom skill runs in Azure Container Apps, but it can run anywhere. Custom skills are often implemented as Azure Functions.

The code starts as follows:

skillset_name = f"{index_name}-skillset"

embedding_skill = AzureOpenAIEmbeddingSkill(
    description="Skill to generate embeddings via Azure OpenAI",
    context="/document",
    resource_uri="https://OPEN_AI_RESOURCE.openai.azure.com",
    deployment_id="DEPLOYMENT_NAME_OF_EMBEDDING_MODEL",
    api_key=os.getenv('AZURE_OPENAI_KEY'),
    inputs=[
        InputFieldMappingEntry(name="text", source="/document/description"),
    ],
    outputs=[
        OutputFieldMappingEntry(name="embedding", target_name="textVector")
    ],
)

Above, we define the skillset name and the embedding skill. The AzureOpenAIEmbeddingSkill points to a deployed text-embedding-ada-002 embedding model. Use the name of your deployment, not the model name.

Each skill operates within a context. The context above is the entire document (/document), but other skills can use a narrower context. The input to the embedding skill is our description field (/document/description), and the output is a vector. The target_name above names a new node in the enriched document that the indexer builds during the so-called enrichment process; it is not an index field yet. We will need to configure the indexer to write this node to the index.
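A rough mental model: the indexer builds an enriched document tree rooted at /document; each skill reads its inputs from paths in that tree and writes its outputs as new nodes, named after target_name, under its context. The sketch below models that with plain dictionaries. get_path, run_skill and fake_embed are hypothetical helpers, and the real enrichment pipeline does more (for example, a context such as /document/pages/* fans out over a collection).

```python
# Hypothetical model of the enrichment tree: skills read inputs from paths like
# "/document/description" and write outputs under "<context>/<target_name>".
from typing import Any, Callable


def get_path(tree: dict[str, Any], path: str) -> Any:
    """Resolve a path such as '/document/description' in a nested dict."""
    node: Any = tree
    for part in path.strip("/").split("/"):
        node = node[part]
    return node


def run_skill(tree: dict[str, Any], context: str, inputs: dict[str, str],
              outputs: dict[str, str], fn: Callable[..., dict[str, Any]]) -> None:
    """Call fn with the resolved inputs and store its outputs under the context node."""
    args = {name: get_path(tree, source) for name, source in inputs.items()}
    result = fn(**args)
    context_node = get_path(tree, context)
    for output_name, target_name in outputs.items():
        context_node[target_name] = result[output_name]


def fake_embed(text: str) -> dict[str, Any]:
    # Stand-in for the embedding model: returns a fake 3-value "embedding"
    return {"embedding": [float(len(text)), 0.0, 1.0]}


tree = {"document": {"url": "https://example/city.jpg", "description": "London skyline"}}
run_skill(tree, context="/document",
          inputs={"text": "/document/description"},   # like InputFieldMappingEntry
          outputs={"embedding": "textVector"},          # like OutputFieldMappingEntry
          fn=fake_embed)
print(tree["document"]["textVector"])  # → [14.0, 0.0, 1.0]
```

The new textVector node lives only in the enriched tree; nothing in this model writes it to an index, which mirrors why the indexer needs output field mappings.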

The question is: “Where does the description come from?”. The description comes from the WebApiSkill. Because the embedding skill needs the description field generated by the WebApiSkill, the WebApiSkill will run first. Here is the custom web api skill:

custom_skill = WebApiSkill(
    description="A custom skill that creates an image vector and description",
    uri="YOUR_ENDPOINT",
    http_method="POST",
    timeout="PT60S",
    batch_size=4,
    degree_of_parallelism=4,
    context="/document",
    inputs=[
        InputFieldMappingEntry(name="url", source="/document/url"),
    ],
    outputs=[
        OutputFieldMappingEntry(name="embedding", target_name="imageVector"),
        OutputFieldMappingEntry(name="description", target_name="description"),
    ],
)

The input to the custom skill is the URL of our image. The indexer posts that URL to the endpoint you define in the uri parameter. With batch_size and degree_of_parallelism, you control how many inputs are sent in one batch and how many batches are sent concurrently. The indexer sends the inputs in a specific JSON format, and your endpoint has to reply in a matching format.
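The Web API skill contract is documented: the indexer POSTs a JSON body with a values array, where each record has a recordId and a data object holding the mapped inputs, and the endpoint returns a values array with the same recordId values and the outputs in data, plus optional errors and warnings. Below is a framework-agnostic sketch of that handler logic. describe_image and embed_image are hypothetical placeholders, not the code in the repo; you would wrap handle_skill_request in whatever web framework or Azure Function hosts your skill.

```python
# Hypothetical sketch of the request/response contract of a custom Web API skill.
# describe_image and embed_image stand in for GPT-4 Vision and the image vector model.
from typing import Any


def describe_image(url: str) -> str:
    # Placeholder: the real skill would call GPT-4 Vision here
    return f"Description of {url.rsplit('/', 1)[-1]}"


def embed_image(url: str) -> list[float]:
    # Placeholder: the real skill would call an image embedding model (1024 dimensions)
    return [0.0] * 1024


def handle_skill_request(body: dict[str, Any]) -> dict[str, Any]:
    """Process one batch (up to batch_size records) and answer per recordId."""
    results = []
    for record in body.get("values", []):
        record_id = record["recordId"]
        try:
            url = record["data"]["url"]
            data = {"embedding": embed_image(url), "description": describe_image(url)}
            results.append({"recordId": record_id, "data": data,
                            "errors": [], "warnings": []})
        except Exception as exc:
            # Report a per-record error instead of failing the whole batch
            results.append({"recordId": record_id, "data": {},
                            "errors": [{"message": str(exc)}], "warnings": []})
    return {"values": results}


request = {"values": [
    {"recordId": "0", "data": {"url": "https://mystorage.blob.core.windows.net/images/city.jpg"}},
    {"recordId": "1", "data": {}},  # missing url: this record gets an error entry
]}
response = handle_skill_request(request)
print(response["values"][0]["data"]["description"])  # → Description of city.jpg
```

Returning errors per record lets the indexer mark only the affected document as failed while the rest of the batch is indexed normally.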

This skill also operates at the document level and creates two new fields. The contents of those fields are generated by your custom endpoint and returned as embedding and description. They are mapped to imageVector and description. Again, those fields are temporary and need to be written to the index by the indexer.
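As mentioned above, the embedding skill consumes /document/description, which the custom skill produces, so the custom skill has to run first even though it is listed second in the skillset. The hypothetical sketch below derives that order from the skills' inputs and outputs with a topological sort from the standard library; it only models the dependency reasoning, not the service's scheduler.

```python
# Hypothetical sketch: derive skill execution order from inputs and outputs.
from graphlib import TopologicalSorter

# skill name -> (input paths, output paths), mirroring the two skills in this post
skills = {
    "embedding_skill": (["/document/description"], ["/document/textVector"]),
    "custom_skill": (["/document/url"], ["/document/imageVector", "/document/description"]),
}

# Map each produced path to the skill that produces it
producers = {out: name for name, (_, outs) in skills.items() for out in outs}

# A skill depends on every skill that produces one of its inputs;
# /document/url comes from the data source, so it has no producing skill
graph = {
    name: {producers[src] for src in ins if src in producers}
    for name, (ins, _) in skills.items()
}

order = list(TopologicalSorter(graph).static_order())
print(order)  # → ['custom_skill', 'embedding_skill']
```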

To see the code of the custom skill, check https://github.com/gbaeke/vision/tree/main/img_vector_skill. That skill is written for demo purposes and was not thoroughly vetted to be used in production. Use at your own risk. In addition, GPT-4 Vision requires an OpenAI key (not Azure OpenAI) and currently (December 2023) allows 100 calls per day! You currently cannot use this at scale. Azure also provides image captioning models that might fit the purpose.

Now we can create the skillset:

skillset = SearchIndexerSkillset(
    name=skillset_name,
    description="Skillset to generate embeddings",
    skills=[embedding_skill, custom_skill],
)

client = SearchIndexerClient(service_endpoint, AzureKeyCredential(key))
client.create_or_update_skillset(skillset)
print(f"Skillset '{skillset.name}' created or updated")

The above code results in the following:

Indexer

The indexer is the final piece of the puzzle and brings the data source, index and skillset together:

indexer_name = f"{index_name}-indexer"

indexer = SearchIndexer(
    name=indexer_name,
    description="Indexer to index documents and generate description and embeddings",
    skillset_name=skillset_name,
    target_index_name=index_name,
    parameters=IndexingParameters(
        max_failed_items=-1
    ),
    data_source_name=data_source.name,
    # Map blob metadata to index fields; the path is base64-encoded so it can serve as the document key
    field_mappings=[
        FieldMapping(source_field_name="metadata_storage_path", target_field_name="path",
                     mapping_function=FieldMappingFunction(name="base64Encode")),
        FieldMapping(source_field_name="metadata_storage_name", target_field_name="name"),
        FieldMapping(source_field_name="metadata_storage_path", target_field_name="url"),
    ],
    # Write the enrichments created by the skills to the index
    output_field_mappings=[
        FieldMapping(source_field_name="/document/textVector", target_field_name="textVector"),
        FieldMapping(source_field_name="/document/imageVector", target_field_name="imageVector"),
        FieldMapping(source_field_name="/document/description", target_field_name="description"),
    ],
)

indexer_client = SearchIndexerClient(service_endpoint, AzureKeyCredential(key))
indexer_result = indexer_client.create_or_update_indexer(indexer)

Above, we create an instance of type SearchIndexer and set the indexer’s name, the data source name, the skillset name and the target index.

The most important parts are the field mappings and the output field mappings.

Field mappings take data from the indexer’s data source and map them to a field in the index. In our case, that’s content and metadata from Azure Blob Storage. The metadata fields in the code above are described in the documentation. In a field mapping, you can configure a mapping function. We use the base64Encode mapping function for the path field.
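Why encode the path at all? Azure AI Search document keys may only contain letters, digits, underscores, dashes and equal signs, while a blob URL contains characters such as : and /. The sketch below uses Python's URL-safe base64 to show the idea. It is an approximation: the service's base64Encode is also URL-safe, but its exact padding handling may differ, so do not use this to reproduce keys byte for byte. The storage account name is hypothetical.

```python
# Sketch: why the blob path is base64-encoded before it becomes the document key.
# Keys may only contain letters, digits, '_', '-' and '='; a URL contains ':' and '/'.
import base64
import re

KEY_PATTERN = re.compile(r"^[A-Za-z0-9_\-=]+$")


def to_document_key(path: str) -> str:
    """URL-safe base64 of the UTF-8 path (an approximation of base64Encode)."""
    return base64.urlsafe_b64encode(path.encode("utf-8")).decode("ascii")


def from_document_key(key: str) -> str:
    """Decode a key produced by to_document_key back into the original path."""
    return base64.urlsafe_b64decode(key.encode("ascii")).decode("utf-8")


path = "https://mystorage.blob.core.windows.net/images/city.jpg"  # hypothetical account
key = to_document_key(path)
print(bool(KEY_PATTERN.match(path)))   # → False: the raw URL is not a valid key
print(bool(KEY_PATTERN.match(key)))    # → True
print(from_document_key(key) == path)  # → True
```

Because the encoding is reversible, the key stays unique per blob and can still be traced back to its source file, while the separate url field keeps the readable URL for display.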

Output field mappings take new fields created during the enrichment process and map them to fields in the index. You can see that the fields created by the skills are mapped to fields in the index. Without these mappings, the skills would generate the data internally, but the data would never appear in the index.
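A hypothetical model of that last point: only enriched nodes that are explicitly mapped end up in the index document. The sketch starts from the values set by field mappings (here just name) and copies the three mapped nodes from the enriched tree; the extra scratch node is made up to show an enrichment that is never written. resolve and build_index_document are illustrative helpers, not SDK functions.

```python
# Hypothetical model of output field mappings: only enriched nodes that are
# explicitly mapped end up as fields in the index document.
from typing import Any

# (source path in the enriched document, target index field), as in the indexer above
OUTPUT_FIELD_MAPPINGS = [
    ("/document/textVector", "textVector"),
    ("/document/imageVector", "imageVector"),
    ("/document/description", "description"),
]


def resolve(tree: dict[str, Any], path: str) -> Any:
    """Follow a path like '/document/description'; return None if a step is missing."""
    node: Any = tree
    for part in path.strip("/").split("/"):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def build_index_document(mapped_fields: dict[str, Any],
                         enriched: dict[str, Any]) -> dict[str, Any]:
    """Start from the values set by field mappings, then add mapped enrichments."""
    doc = dict(mapped_fields)
    for source_path, target_field in OUTPUT_FIELD_MAPPINGS:
        value = resolve(enriched, source_path)
        if value is not None:
            doc[target_field] = value
    return doc


enriched = {"document": {
    "description": "London skyline",
    "textVector": [0.1, 0.2],     # toy vectors; real ones have 1536/1024 values
    "imageVector": [0.3, 0.4],
    "scratch": "never mapped",    # an enrichment without an output field mapping
}}
doc = build_index_document({"name": "city.jpg"}, enriched)
print(sorted(doc))  # → ['description', 'imageVector', 'name', 'textVector']
```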

Once the indexer is defined, it gets created (or updated) using an instance of type SearchIndexerClient.

Note that we set an indexer parameter, max_failed_items, to -1. This means that the indexer keeps going, no matter how many errors it produces. In the indexer screen below, you can see there was one error:

The error happened because the image vectorizer in the custom Web API skill threw an error on one of the images.
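A simplified, hypothetical model of what max_failed_items controls: with -1, failures never stop the run; with a non-negative value (the service default is 0), the run is reported as failed once the number of failed items exceeds that value. run_indexer and process are illustrative names, and the failing broken.jpg stands in for the one image the custom skill could not vectorize.

```python
# Hypothetical model of max_failed_items: -1 means "never stop because of failures";
# otherwise the run stops and is reported as failed once failures exceed the limit.
from typing import Callable, Iterable


def run_indexer(items: Iterable[str], process: Callable[[str], None],
                max_failed_items: int = 0) -> tuple[list[str], list[str], bool]:
    """Return (succeeded, failed, run_succeeded)."""
    succeeded, failed = [], []
    for item in items:
        try:
            process(item)
            succeeded.append(item)
        except Exception:
            failed.append(item)
            if max_failed_items != -1 and len(failed) > max_failed_items:
                return succeeded, failed, False
    return succeeded, failed, True


def process(name: str) -> None:
    # Stand-in for the skill pipeline; one image fails like in the run above
    if name == "broken.jpg":
        raise ValueError("image vectorizer error")


images = ["city.jpg", "broken.jpg", "beach.jpg"]
print(run_indexer(images, process, max_failed_items=-1))
# → (['city.jpg', 'beach.jpg'], ['broken.jpg'], True)
print(run_indexer(images, process, max_failed_items=0))
# → (['city.jpg'], ['broken.jpg'], False)
```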

Using an indexer has several advantages:

- Indexing is a background process and can run on a schedule; there is no need to schedule your own indexing process

- Indexers keep track of what they indexed and can index only new data; with your own code, you have to maintain that state; failed documents, like the one above, are not reprocessed

- Depending on the source, indexers see deletions and will remove entries from the index

- Indexers can be easily reset to trigger a full index

- Indexing errors are reported, and you can inspect what went wrong, for example with a debug session in the portal
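To show the kind of state an indexer maintains for you, here is a hypothetical sketch of high-water-mark change detection: remember the newest last-modified timestamp seen, and on the next run only pick up blobs modified after it. For blob data sources the service bases change detection on the blob's last-modified timestamp; select_changed and the timestamps below are illustrative only, and deletion tracking is not modeled.

```python
# Hypothetical sketch of high-water-mark change detection: remember the newest
# last-modified timestamp seen, and on the next run only pick up newer blobs.
from datetime import datetime, timezone
from typing import Optional


def select_changed(blobs: dict[str, datetime],
                   high_water_mark: Optional[datetime]) -> tuple[list[str], Optional[datetime]]:
    """Return the names of blobs modified after the mark, plus the new mark to persist."""
    changed = sorted(name for name, modified in blobs.items()
                     if high_water_mark is None or modified > high_water_mark)
    candidates = list(blobs.values()) + ([high_water_mark] if high_water_mark else [])
    return changed, max(candidates, default=None)


def day(d: int) -> datetime:
    return datetime(2023, 12, d, tzinfo=timezone.utc)


blobs = {"city.jpg": day(1), "beach.jpg": day(2)}
first_run, mark = select_changed(blobs, None)    # first run: everything is new
blobs["forest.jpg"] = day(5)                      # a new upload arrives
second_run, mark = select_changed(blobs, mark)   # next run: only the new blob
print(first_run, second_run)  # → ['beach.jpg', 'city.jpg'] ['forest.jpg']
```

With push-based indexing, you would have to persist a value like mark yourself between runs; an indexer stores it for you, and resetting the indexer clears it to trigger a full re-index.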

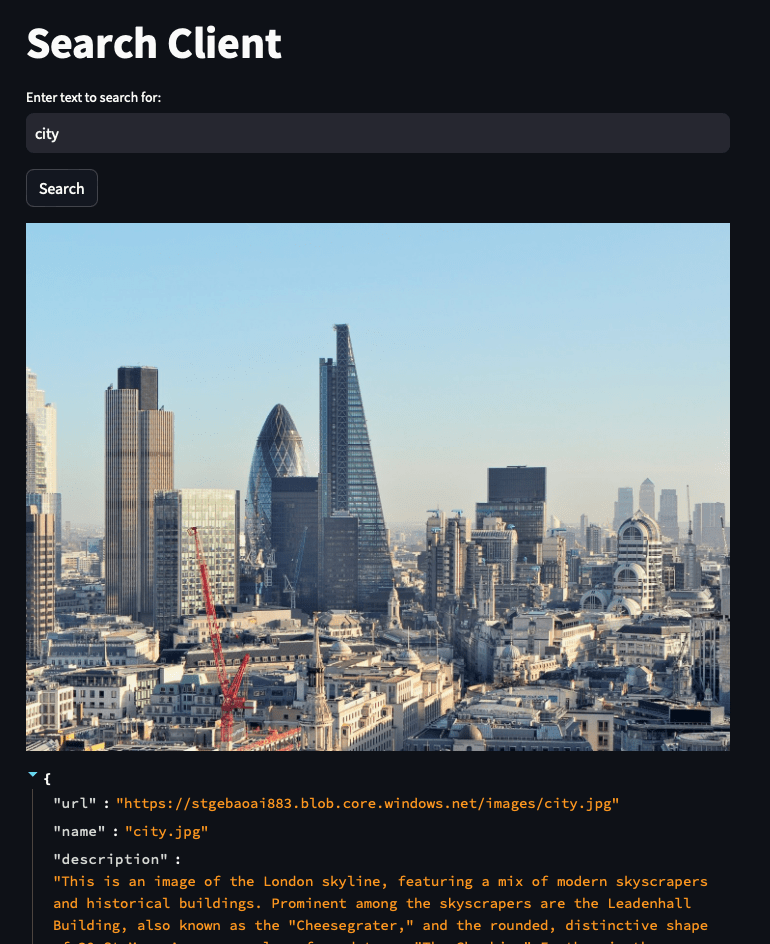

Testing the index

We can test the index by performing a text-based search that uses the integrated vectorizer:

# Pure Vector Search
query = "city"

search_client = SearchClient(service_endpoint, index_name, credential=AzureKeyCredential(key))
vector_query = VectorizableTextQuery(text=query, k=1, fields="textVector", exhaustive=True)

results = search_client.search(
    search_text=None,
    vector_queries=[vector_query],
    select=["name", "description", "url"],
    top=1
)

# print selected fields from dictionary
for result in results:
    print(result["name"])
    print(result["description"])
    print(result["url"])
    print("")

Above, we search for city (in the query variable). The VectorizableTextQuery class (in preview) takes the plain text in the query variable and vectorizes it for us with the embedding model defined in the integrated vectorizer. In addition, we specify how many results to return (one nearest neighbor) and that we want to search all vectors (exhaustive).

Note: remember that the vector field was configured for HNSW; we can switch to exhaustive as shown above

Next, search_client.search performs the actual search. It only provides the vector query, which results in a pure similarity search with the query vector; search_text is set to None. Set search_text to the query if you want to do a hybrid search. The notebook contains additional examples that also do a keyword and semantic search with highlighting.
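When you do set search_text for a hybrid query, the service runs the keyword and vector queries and merges the two ranked lists; the Azure AI Search documentation describes this merge as Reciprocal Rank Fusion (RRF). The sketch below shows the idea in plain Python. The file names are made up, and k=60 is the constant commonly used for RRF, an assumption here rather than a value this post confirms for the service.

```python
# Sketch of Reciprocal Rank Fusion (RRF): each result list contributes
# 1 / (k + rank) per document, and documents are re-ranked by the summed score.
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda pair: pair[1], reverse=True)


keyword_results = ["city.jpg", "bridge.jpg", "beach.jpg"]  # e.g. BM25 ranking
vector_results = ["city.jpg", "tower.jpg", "bridge.jpg"]   # e.g. textVector ranking
fused = reciprocal_rank_fusion([keyword_results, vector_results])
print([doc_id for doc_id, _ in fused])
# → ['city.jpg', 'bridge.jpg', 'tower.jpg', 'beach.jpg']
```

Because RRF only uses ranks, it sidesteps the problem that keyword scores and cosine similarities live on different scales; a document that ranks reasonably well in both lists (bridge.jpg) can beat one that ranks higher in only one list (tower.jpg).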

The search gives the following result (selected fields: name, description, url):

city.jpg

This is an image of the London skyline, featuring a mix of modern skyscrapers and historical buildings. Prominent among the skyscrapers are the Leadenhall Building, also known as the "Cheesegrater," and the rounded, distinctive shape of 30 St Mary Axe, commonly referred to as "The Gherkin." Further in the background, the towers of Canary Wharf can be seen. The view is clear and taken on a day with excellent visibility.

https://stgebaoai883.blob.core.windows.net/images/city.jpg

The image the URL points to is:

In the repo’s search-client folder, you can find a Streamlit app to search for and display images and dump the entire search result object. Make sure you install all the packages in requirements.txt and the preview Azure AI Search package from the whl folder. Simply type streamlit run app.py to run the app:

Conclusion

In this post, we used the Azure AI Search Python SDK to create an indexer that takes images as input, creates new fields with skills, and writes those fields plus metadata to an index.

We touched on the advantages of using an indexer versus your own indexing code (pull versus push).

With this code and some sample images, you should be able to build an image search application yourself.