Introduction

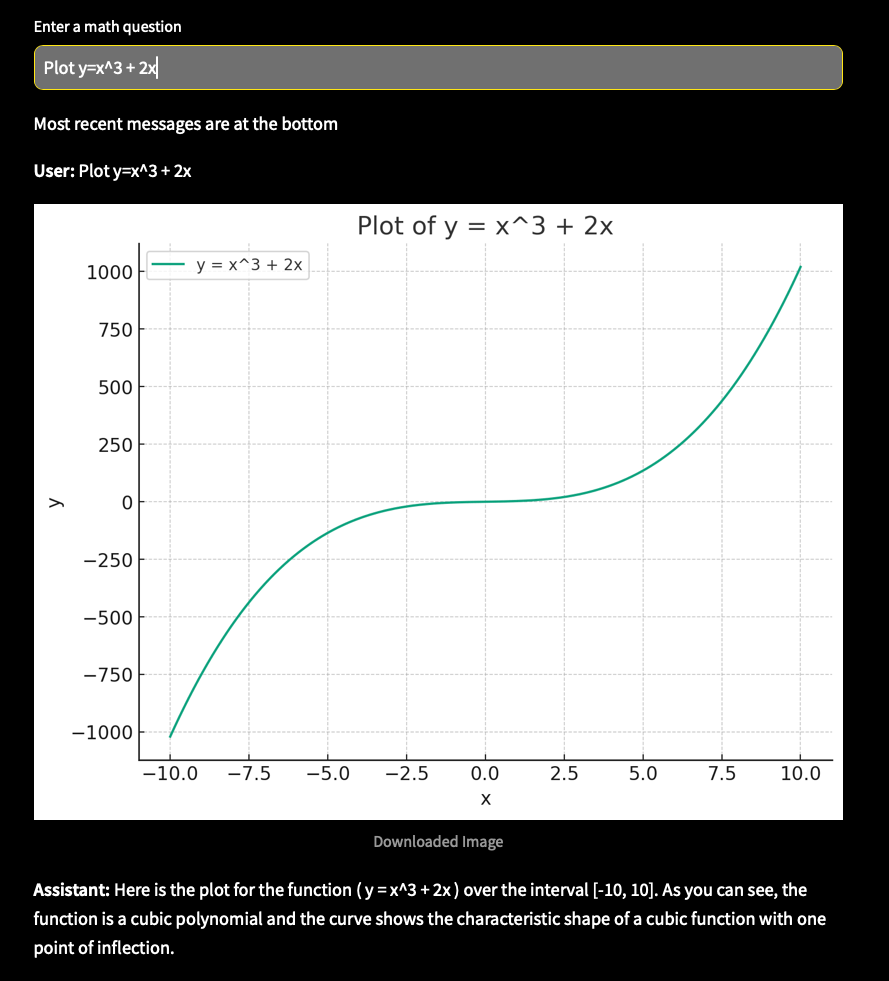

In a previous blog post, I wrote an introduction to the Azure OpenAI Assistants API. As an example, I created an assistant with access to the Code Interpreter tool. You can find the code here.

In this post, we will give the assistant custom tools. These custom tools use the function calling feature of more recent GPT models. As a result, they are called functions in the Assistants API. What’s in a name, right?

There are a couple of steps you need to take for this to work:

- Create an assistant and give it a name and instructions.

- Define one or more functions in the assistant. Functions are defined in JSON. You need to provide good descriptions for the function and all of its parameters.

- In your code, detect when the model chooses one or more functions that should be executed.

- Execute the functions and pass the results to the model to get a final response that uses the function results.

From the above, it should be clear that the model, gpt-3.5-turbo or gpt-4, does not call your code. It merely proposes functions and their parameters in response to a user question.

For instance, if the user asks “Turn on the light in the living room”, the model checks whether there is a function that can do that. If there is, it might propose calling the function set_lamp with parameters such as the lamp name and a state like true or false. The diagram below illustrates this flow for the case where the function call succeeds.

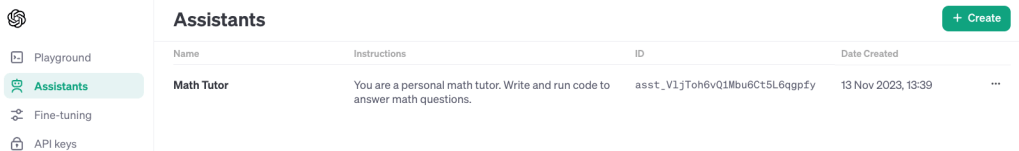

Creating the assistant in Azure OpenAI Playground

Unlike in the previous post, we create the assistant in the Azure OpenAI Playground. Our code then uses the assistant through its unique identifier. In the Azure OpenAI Playground, the assistant looks like this:

Let’s discuss the numbers in the diagram:

- Once you save the assistant, you get its ID. We will use this ID in our code later.

- Assistant name

- Assistant instructions: description of what the assistant can do, that it has functions, and how it should behave; you will probably need to experiment with this to let the assistant do exactly what you want

- Two function definitions: set_lamp and set_lamp_brightness. You can test the functions in the chat panel. When the assistant detects that a function needs to be called, it proposes the function and its parameters and asks you to provide a result. The result you type is then used to formulate a response like “The living room lamp has been turned on.”

Let’s take a look at the function definition for set_lamp:

```json
{
  "name": "set_lamp",
  "description": "Turn lamp on or off",
  "parameters": {
    "type": "object",
    "properties": {
      "lamp": {
        "type": "string",
        "description": "Name of the lamp"
      },
      "state": {
        "type": "boolean"
      }
    },
    "required": [
      "lamp",
      "state"
    ]
  }
}
```

The other function is similar, but its second parameter is an integer between 0 and 100. If your function does not get called, or the parameters are wrong, try to improve the descriptions of both the function and each of its parameters. The underlying GPT model uses these descriptions to match a user question to one or more functions.
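The set_lamp_brightness definition itself is not shown here. A plausible version, written as a Python dict so it can be checked and printed, might look like the sketch below. The descriptions and the use of the JSON Schema minimum and maximum keywords to express the 0 to 100 range are assumptions, not necessarily what the assistant in the portal contains.

```python
import json

# Hypothetical definition for set_lamp_brightness: the descriptions and the
# minimum/maximum keywords are assumptions, not copied from the portal
set_lamp_brightness_definition = {
    "name": "set_lamp_brightness",
    "description": "Set the brightness of a lamp",
    "parameters": {
        "type": "object",
        "properties": {
            "lamp": {
                "type": "string",
                "description": "Name of the lamp, for example 'living room' or 'kitchen'",
            },
            "brightness": {
                "type": "integer",
                "description": "Brightness as a percentage, from 0 (off) to 100 (full)",
                "minimum": 0,
                "maximum": 100,
            },
        },
        "required": ["lamp", "brightness"],
    },
}

# Print JSON that could be pasted into the function definition in the playground
print(json.dumps(set_lamp_brightness_definition, indent=2))
```

The model treats the range as a hint rather than a guarantee, so the Python function that receives the value should still check it.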

Let’s look at some code. See https://github.com/gbaeke/azure-assistants-api/blob/main/func.ipynb for the example notebook.

Using the assistant from your code

We start with an Azure OpenAI client, as discussed in the previous post.

```python
import os
from dotenv import load_dotenv
from openai import AzureOpenAI

load_dotenv()

# Create Azure OpenAI client
client = AzureOpenAI(
    api_key=os.getenv('AZURE_OPENAI_API_KEY'),
    azure_endpoint=os.getenv('AZURE_OPENAI_ENDPOINT'),
    api_version=os.getenv('AZURE_OPENAI_API_VERSION')
)

# assistant ID as created in the portal
assistant_id = "YOUR ASSISTANT ID"
```

Creating a thread and adding a message

We will add the following message to a new thread: “Turn living room lamp and kitchen lamp on. Set both lamps to half brightness.”

The model should propose multiple function calls in a certain order. The expected order is:

- turn on living room lamp

- turn on kitchen lamp

- set living room brightness to 50

- set kitchen brightness to 50

```python
# Create a thread
thread = client.beta.threads.create()

import time
from IPython.display import clear_output

# function returns the run when status is no longer queued or in_progress
def wait_for_run(run, thread_id):
    while run.status == 'queued' or run.status == 'in_progress':
        run = client.beta.threads.runs.retrieve(
            thread_id=thread_id,
            run_id=run.id
        )
        time.sleep(0.5)
    return run

# create a message
message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="Turn living room lamp and kitchen lamp on. Set both lamps to half brightness."
)

# create a run
run = client.beta.threads.runs.create(
    thread_id=thread.id,
    assistant_id=assistant_id  # use the assistant id defined in the first cell
)

# wait for the run to complete
run = wait_for_run(run, thread.id)

# show information about the run
# should indicate that run status is requires_action
# should contain information about the tools to call
print(run.model_dump_json(indent=2))
```

After creating the thread and adding a message, we use a slightly different approach to check the status of the run. The wait_for_run function keeps polling the run as long as its status is either queued or in_progress. When it is not, the run is returned. When we are done waiting, we dump the run as JSON.
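wait_for_run has no upper bound, so a run that stays queued would block the notebook indefinitely. A minimal variation that gives up after a timeout could look like the sketch below. The name wait_for_run_with_timeout, the retrieve callable and the timeout values are assumptions for illustration. It also keeps polling while the run is cancelling, which is another transient run status in the Assistants API. The fake retrieve at the end only exists so the sketch runs offline.

```python
import time
from types import SimpleNamespace

# Statuses in which a run is still being processed, so we keep polling
PENDING_STATUSES = {"queued", "in_progress", "cancelling"}

def wait_for_run_with_timeout(run, retrieve, timeout=60.0, interval=0.5):
    """Poll until the run leaves a pending status or the timeout expires.

    retrieve is any callable that takes a run ID and returns a fresh run object
    with id and status attributes. With the client from this post it could be:
    lambda run_id: client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run_id)
    """
    deadline = time.monotonic() + timeout
    while run.status in PENDING_STATUSES:
        if time.monotonic() > deadline:
            raise TimeoutError(f"Run {run.id} still {run.status} after {timeout} seconds")
        time.sleep(interval)
        run = retrieve(run.id)
    return run

# Offline demo: a fake retrieve that reports requires_action on the second poll
states = iter(["in_progress", "requires_action"])
fake_retrieve = lambda run_id: SimpleNamespace(id=run_id, status=next(states))
demo_run = wait_for_run_with_timeout(
    SimpleNamespace(id="run_demo", status="queued"), fake_retrieve, interval=0.01
)
print(demo_run.status)  # requires_action
```

Passing retrieve in as a parameter keeps the polling logic independent of the client, which is also what makes the offline demo possible.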

Here is where it gets interesting. A run has many properties, like created_at, model and more. In our case, we expect a response that indicates we need to take action by running one or more functions. This is indicated by the presence of the required_action property. Specifically, it asks for tool outputs and presents a list of tool calls to perform (tool, function, whatever… 😀). Here’s a JSON snippet from the run JSON dump:

```json
"required_action": {
  "submit_tool_outputs": {
    "tool_calls": [
      {
        "id": "call_2MhF7oRsIIh3CpLjM7RAuIBA",
        "function": {
          "arguments": "{\"lamp\": \"living room\", \"state\": true}",
          "name": "set_lamp"
        },
        "type": "function"
      },
      {
        "id": "call_SWvFSPllcmVv1ozwRz7mDAD6",
        "function": {
          "arguments": "{\"lamp\": \"kitchen\", \"state\": true}",
          "name": "set_lamp"
        },
        "type": "function"
      }, ... more function calls follow...
```

Above, it’s clear that the assistant wants you to submit tool outputs for multiple function calls. Only the first two are shown:

- Function set_lamp with the arguments lamp and state set to “living room” and true

- Function set_lamp with the arguments lamp and state set to “kitchen” and true

- The other two calls propose set_lamp_brightness for both lamps, with brightness set to 50
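Note that arguments is a JSON-encoded string, not an object, so it has to be decoded before the values can be used. A small sketch that works on a plain dict shaped like the snippet above (only the two calls that are shown, with the remaining calls left out) pulls out the ID, function name and decoded arguments for each call. The helper name summarize_tool_calls is hypothetical.

```python
import json

# Plain-dict version of the required_action snippet above (first two calls only)
required_action = {
    "submit_tool_outputs": {
        "tool_calls": [
            {
                "id": "call_2MhF7oRsIIh3CpLjM7RAuIBA",
                "function": {"arguments": "{\"lamp\": \"living room\", \"state\": true}", "name": "set_lamp"},
                "type": "function",
            },
            {
                "id": "call_SWvFSPllcmVv1ozwRz7mDAD6",
                "function": {"arguments": "{\"lamp\": \"kitchen\", \"state\": true}", "name": "set_lamp"},
                "type": "function",
            },
        ]
    }
}

def summarize_tool_calls(required_action):
    """Return (call_id, function_name, decoded_arguments) for every function call."""
    summary = []
    for call in required_action["submit_tool_outputs"]["tool_calls"]:
        if call["type"] != "function":
            continue  # this sketch only handles function tools
        # arguments is a JSON string, so decode it into a dict
        arguments = json.loads(call["function"]["arguments"])
        summary.append((call["id"], call["function"]["name"], arguments))
    return summary

for call_id, name, args in summarize_tool_calls(required_action):
    print(call_id, name, args)
```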

Defining the functions

Our code will need some real functions to call that actually do something. In this example, we use these two dummy functions. In reality, you could integrate this with Hue or other smart lighting. In fact, I have something like that: https://github.com/gbaeke/openai_assistant.

Here are the dummy functions:

```python
make_error = False

def set_lamp(lamp="", state=True):
    if make_error:
        return "An error occurred"
    return f"The {lamp} is {'on' if state else 'off'}"

def set_lamp_brightness(lamp="", brightness=100):
    if make_error:
        return "An error occurred"
    return f"The brightness of the {lamp} is set to {brightness}"
```

The functions should return a string that the model can interpret. Be as concise as possible to save tokens…💰
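The tool-call loop in the next section picks the function with an if/elif chain. An alternative design is a lookup table from function names to Python callables, so adding a function only means adding a dict entry. A minimal sketch, where AVAILABLE_FUNCTIONS and run_tool_call are hypothetical names, that always hands the model a string, even for unknown functions or bad arguments:

```python
import json

make_error = False

# The dummy functions from above, repeated so this block runs on its own
def set_lamp(lamp="", state=True):
    if make_error:
        return "An error occurred"
    return f"The {lamp} is {'on' if state else 'off'}"

def set_lamp_brightness(lamp="", brightness=100):
    if make_error:
        return "An error occurred"
    return f"The brightness of the {lamp} is set to {brightness}"

# Map function names, as used in the assistant's definitions, to Python callables
AVAILABLE_FUNCTIONS = {
    "set_lamp": set_lamp,
    "set_lamp_brightness": set_lamp_brightness,
}

def run_tool_call(name, arguments_json):
    """Execute one proposed call and always return a string for the model."""
    func = AVAILABLE_FUNCTIONS.get(name)
    if func is None:
        return f"Unknown function: {name}"
    try:
        return func(**json.loads(arguments_json))
    except (TypeError, ValueError) as exc:
        # invalid JSON (ValueError) or unexpected arguments (TypeError)
        return f"Could not call {name}: {exc}"

print(run_tool_call("set_lamp", '{"lamp": "kitchen", "state": true}'))  # The kitchen is on
print(run_tool_call("set_lamp_brightness", '{"lamp": "kitchen", "brightness": 50}'))  # The brightness of the kitchen is set to 50
print(run_tool_call("set_color", '{"lamp": "kitchen"}'))  # Unknown function: set_color
```

Returning an error string instead of raising lets the model explain the problem to the user, just like it does with the make_error responses.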

Doing the tool/function calls

In the next code block, we check if the run requires action, get the tool calls we need to make and then iterate through the tool_calls array. At each iteration, we check the function name, call the matching function and add the result to the tool_outputs list. That list is then passed to the model. Check out the code below and its comments:

```python
import json

# we only check for required_action here
# required action means we need to call a tool
if run.required_action:
    # get tool calls and print them
    # check the output to see what tool_calls contains
    tool_calls = run.required_action.submit_tool_outputs.tool_calls
    print("Tool calls:", tool_calls)

    # we might need to call multiple tools
    # the Assistants API supports parallel tool calls
    # in this example, the assistant proposes four calls at once
    tool_outputs = []

    for tool_call in tool_calls:
        func_name = tool_call.function.name
        arguments = json.loads(tool_call.function.arguments)

        # call the function with the arguments provided by the assistant
        if func_name == "set_lamp":
            result = set_lamp(**arguments)
        elif func_name == "set_lamp_brightness":
            result = set_lamp_brightness(**arguments)
        else:
            # report unknown functions back instead of failing or reusing a previous result
            result = f"Unknown function: {func_name}"

        # append the results to the tool_outputs list
        # you need to specify the tool_call_id so the assistant knows which tool call the output belongs to
        tool_outputs.append({
            "tool_call_id": tool_call.id,
            "output": json.dumps(result)
        })

    # now that we have the tool call outputs, pass them to the assistant
    run = client.beta.threads.runs.submit_tool_outputs(
        thread_id=thread.id,
        run_id=run.id,
        tool_outputs=tool_outputs
    )
    print("Tool outputs submitted")

    # now we wait for the run again
    run = wait_for_run(run, thread.id)
else:
    print("No tool calls identified\n")

# show information about the run
print("Run information:")
print("----------------")
print(run.model_dump_json(indent=2), "\n")

# now print all messages in the thread
print("Messages in the thread:")
print("-----------------------")
messages = client.beta.threads.messages.list(thread_id=thread.id)
print(messages.model_dump_json(indent=2))
```

At the end, we dump both the run and the messages as JSON. The messages should contain the model’s final response. To print the messages in a nicer way, you can use the following code:

```python
import json

messages_json = json.loads(messages.model_dump_json())

def role_icon(role):
    if role == "user":
        return "👤"
    elif role == "assistant":
        return "🤖"

for item in reversed(messages_json['data']):
    # Check the content array
    for content in reversed(item['content']):
        # If there is text in the content array, print it
        if 'text' in content:
            print(role_icon(item["role"]), content['text']['value'], "\n")
        # If there is an image_file in the content, print the file_id
        if 'image_file' in content:
            print("Image ID:", content['image_file']['file_id'], "\n")
```

In my case, the output was as follows:

When I set make_error to True, every tool call returns an error, and the model reports that back to the user.
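If you only need the model’s final answer rather than the whole conversation, a small helper can pick the newest assistant text from the same messages_json dict. This sketch assumes the default newest-first ordering of the message list (which is why the printing code above iterates in reverse) and content items with a "type" of "text". The helper name and the sample dict are made up for illustration and are not real output.

```python
def latest_assistant_text(messages_json):
    """Return the text of the newest assistant message, or None if there is none.

    messages_json is the dict produced by json.loads(messages.model_dump_json()).
    """
    # The list is assumed to be newest first, so the first match is the latest
    for item in messages_json["data"]:
        if item["role"] != "assistant":
            continue
        texts = [c["text"]["value"] for c in item["content"] if c.get("type") == "text"]
        if texts:
            return "\n".join(texts)
    return None

# Made-up sample with the same shape, newest message first
sample = {
    "data": [
        {"role": "assistant", "content": [{"type": "text", "text": {"value": "Both lamps are on at 50%.", "annotations": []}}]},
        {"role": "user", "content": [{"type": "text", "text": {"value": "Turn living room lamp and kitchen lamp on.", "annotations": []}}]},
    ]
}
print(latest_assistant_text(sample))
```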

What makes this unique?

Function calling is not unique to the Assistants API. It is a feature of more recent GPT models that allows them to propose one or more functions to call from your code. You can also use the Chat Completions API and simply pass in your function descriptions in JSON.
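For comparison, here is a sketch of the Chat Completions side, assuming the OpenAI Python SDK’s tools format: each definition is wrapped in {"type": "function", "function": ...}, and every result is appended to the conversation as a message with role "tool" and the matching tool_call_id. You would then send the extended list to client.chat.completions.create(model=..., messages=..., tools=tools) again to get the final answer. The helper tool_result_messages and the ID call_1 are hypothetical, and the block only builds the messages without making any network calls.

```python
import json

# The set_lamp definition from earlier, wrapped the way Chat Completions expects tools
tools = [{
    "type": "function",
    "function": {
        "name": "set_lamp",
        "description": "Turn lamp on or off",
        "parameters": {
            "type": "object",
            "properties": {
                "lamp": {"type": "string", "description": "Name of the lamp"},
                "state": {"type": "boolean"},
            },
            "required": ["lamp", "state"],
        },
    },
}]

def tool_result_messages(assistant_message, execute):
    """Build the messages to append after the model proposes tool calls.

    assistant_message is the assistant turn as a dict with a "tool_calls" list;
    execute(name, arguments_json) runs the function and returns a string.
    """
    messages = [assistant_message]
    for call in assistant_message["tool_calls"]:
        messages.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": execute(call["function"]["name"], call["function"]["arguments"]),
        })
    return messages

# Hypothetical proposal, shaped like an assistant turn that requests one tool call
proposal = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "set_lamp", "arguments": '{"lamp": "kitchen", "state": true}'},
    }],
}
history = [{"role": "user", "content": "Turn the kitchen lamp on"}]
history += tool_result_messages(proposal, lambda name, args: f"{name} called with {json.loads(args)}")
print(json.dumps(history, indent=2))
```

With Chat Completions you keep and resend this history yourself; in the Assistants API, the thread stores it for you and submit_tool_outputs takes the place of the "tool" messages.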

If you use frameworks like Semantic Kernel or LangChain, you can use function calling through the abstractions they provide. In most cases, that means you do not have to write the function JSON description yourself. Instead, you write your functions in native code and annotate them as a tool or make them part of a plugin. You can then pass a list of tools to an agent, or plugins to a kernel, and you’re done! In fact, LangChain (and soon Semantic Kernel) already supports the Assistants API.
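To illustrate how a framework can derive the JSON description from native code, here is a toy sketch based on Python’s inspect module. It is not how LangChain or Semantic Kernel implement this; describe_function, the type mapping and the type-annotated set_lamp variant are hypothetical. Parameters without a default value are marked as required, and the docstring becomes the function description.

```python
import inspect

# Map simple Python type hints to JSON Schema types
PY_TO_JSON = {str: "string", bool: "boolean", int: "integer", float: "number"}

def describe_function(func, param_descriptions=None):
    """Build a function definition dict from a Python signature and docstring."""
    param_descriptions = param_descriptions or {}
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        # unannotated or unknown types fall back to "string"
        schema = {"type": PY_TO_JSON.get(param.annotation, "string")}
        if name in param_descriptions:
            schema["description"] = param_descriptions[name]
        properties[name] = schema
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }

# Hypothetical annotated variant of set_lamp, without default values
def set_lamp(lamp: str, state: bool) -> str:
    """Turn lamp on or off"""
    return f"The {lamp} is {'on' if state else 'off'}"

print(describe_function(set_lamp, {"lamp": "Name of the lamp"}))
```

The result matches the set_lamp JSON defined in the portal earlier, which is the point: the description lives next to the code instead of in a separate JSON document.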

One advantage of the Assistants API is the ability to define all your functions within the assistant. You can do that in code, but also via the portal. The Assistants API also makes it a bit simpler to process the tool responses, although the difference is not massive.

Being able to test your functions in the Assistant Playground is a big benefit as well.
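Creating the assistant in code instead of the portal could look roughly like the sketch below, assuming the OpenAI Python SDK’s client.beta.assistants.create method. The assistant name, the instructions and the deployment name are placeholders. A small fake client stands in for AzureOpenAI so the sketch runs without credentials; with a real client, you would pass the client object from earlier instead.

```python
from types import SimpleNamespace

def create_lamp_assistant(client, model, tool_definitions):
    """Create an assistant with function tools and return its ID.

    client is an AzureOpenAI client; with Azure OpenAI, model is the name of
    your model deployment.
    """
    assistant = client.beta.assistants.create(
        name="Lamp assistant",  # placeholder name
        instructions=(
            "You control the lamps in a house. Use the available functions "
            "to turn lamps on or off and to set their brightness."
        ),  # placeholder instructions; tune them as you would in the portal
        model=model,
        tools=[{"type": "function", "function": d} for d in tool_definitions],
    )
    return assistant.id

class FakeAssistants:
    """Offline stand-in for client.beta.assistants that records the request."""
    def __init__(self):
        self.last_request = None

    def create(self, **kwargs):
        self.last_request = kwargs
        return SimpleNamespace(id="asst_demo")

# The set_lamp definition from earlier as a Python dict
set_lamp_def = {
    "name": "set_lamp",
    "description": "Turn lamp on or off",
    "parameters": {
        "type": "object",
        "properties": {
            "lamp": {"type": "string", "description": "Name of the lamp"},
            "state": {"type": "boolean"},
        },
        "required": ["lamp", "state"],
    },
}

fake_assistants = FakeAssistants()
fake_client = SimpleNamespace(beta=SimpleNamespace(assistants=fake_assistants))
assistant_id = create_lamp_assistant(fake_client, "my-gpt-4-deployment", [set_lamp_def])
print(assistant_id)  # asst_demo
```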

Conclusion

Function calling in the Assistants API is not very different from function calling in the Chat Completions API. It’s nice that you can create and update your function definitions in the portal and try them directly in the chat panel. Working with the tool calls and tool responses is also a bit easier.