This post is part of a series of blog posts about the Azure OpenAI Assistants API. Here are the previous posts:

- Part 1: introduction

- Part 2: using tools

- Part 3: retrieval

In all of those posts, we demonstrated the capabilities of the Azure OpenAI Assistants API in a Python notebook. In this post, we will build an actual chat application with some help from the Bot Framework SDK.

The Bot Framework SDK is a collection of libraries and tools that let you build, test and deploy bot applications. The target audience is developers. They can write the bot in C#, TypeScript or Python. If you are more of a Power Platform user/developer, you can also use Copilot Studio. I will look at the Assistants API and Copilot Studio in a later post.

The end result after reading this post is a bot you can test with the Bot Framework Emulator. You can download the emulator for your platform here.

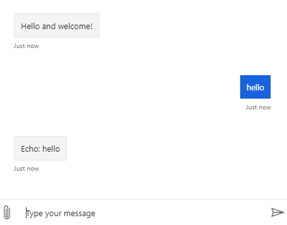

When you run the sample code from GitHub and connect the emulator to the bot running on you local machine, you get something like below:

Writing a basic bot

You can follow the Create a basic bot quickstart on Microsoft Learn to get started. It’s a good quickstart and it is easy to follow.

On that page, switch to Python and follow the instructions. The end-to-end sample I provide is in Python, so using that language will make things easier. At the end of the quickstart, you will have a bot you can start with `python app.py`. The quickstart also shows you how to connect the Bot Framework Emulator to the bot running locally on your machine. The quickstart bot is an echo bot that simply echoes the text you type:

A quick look at the bot code

If you check the bot code in bot.py, you will see two functions:

- `on_members_added_activity`: do something when a new chat starts; we can use this to start a new assistant thread

- `on_message_activity`: react to a user sending a message; here, we can add the message to a thread, run it, and send the response back to the user

👉 This code uses only a tiny fraction of the Bot Framework SDK's features, and there are many more. Check the How-To for developers, which starts with the basics of sending and receiving messages.

Below is a diagram of the chat and assistant flow:

In the diagram, the initial connection triggers on_members_added_activity. Let’s take a look at it:

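```python
from typing import List

from botbuilder.core import ActivityHandler, TurnContext
from botbuilder.schema import ChannelAccount

import assistant  # helper module that lives next to bot.py


class MyBot(ActivityHandler):
    async def on_members_added_activity(
        self,
        members_added: List[ChannelAccount],
        turn_context: TurnContext
    ):
        for member_added in members_added:
            # Skip the bot itself; react only to the user joining the chat
            if member_added.id != turn_context.activity.recipient.id:
                # Create a new assistant thread for this chat
                self.thread_id = assistant.create_thread()
                await turn_context.send_activity("Hello. Thread id is: " + self.thread_id)
```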

I modified the quickstart version of this function to create a thread and store its ID in the thread_id attribute of the MyBot instance. The function create_thread() comes from a module called assistant.py, which I added to the folder that contains bot.py:

```python
def create_thread():
    # client is the AzureOpenAI client created at the top of assistant.py
    thread = client.beta.threads.create()
    return thread.id
```

Easy enough, right?
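One caveat about storing the thread ID in `self.thread_id`: if, as in the quickstart's app.py, a single MyBot instance handles every request, all conversations share that one attribute, and a second user connecting to the bot overwrites the first user's thread. For local testing with the Emulator that is fine. Below is a minimal sketch of one alternative, assuming you keep one assistant thread per Bot Framework conversation, keyed on `turn_context.activity.conversation.id`. `ConversationThreads` and its methods are hypothetical names, and `create_thread` is passed in so the sketch runs without Azure.

```python
from typing import Callable, Dict


class ConversationThreads:
    """Hypothetical helper: one assistant thread per Bot Framework conversation."""

    def __init__(self, create_thread: Callable[[], str]) -> None:
        # create_thread would be assistant.create_thread in the bot; it is
        # injected here so the sketch runs without an Azure OpenAI resource.
        self._create_thread = create_thread
        # In-memory only: the mapping is lost when the bot restarts.
        self._threads: Dict[str, str] = {}

    def get_or_create(self, conversation_id: str) -> str:
        # Create a thread the first time a conversation is seen, then reuse it.
        if conversation_id not in self._threads:
            self._threads[conversation_id] = self._create_thread()
        return self._threads[conversation_id]

    def reset(self, conversation_id: str) -> None:
        # Forget the thread so the next call starts a fresh one.
        self._threads.pop(conversation_id, None)
```

In the bot, you could create `self.threads = ConversationThreads(assistant.create_thread)` in `MyBot.__init__` and call `self.threads.get_or_create(turn_context.activity.conversation.id)` wherever the sample reads `self.thread_id`. For anything beyond local testing, the SDK's `ConversationState` with a storage provider is the more usual place to keep per-conversation data.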

The second function, on_message_activity, is used to respond to new chat messages. That’s number 2 in the diagram above.

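```python
from botbuilder.core import ActivityHandler, TurnContext

import assistant  # helper module that lives next to bot.py


class MyBot(ActivityHandler):
    # on_members_added_activity (shown earlier) sets self.thread_id

    async def on_message_activity(self, turn_context: TurnContext):
        # Add the user's message to the thread and run it
        run = assistant.send_message(self.thread_id, turn_context.activity.text)

        if run is None:
            print("Result of send_message is None")
            # Stop here instead of passing None to check_for_tools
            await turn_context.send_activity("Sorry, something went wrong. Please try again.")
            return

        # Run any functions (tools) the assistant asked for
        tool_check = assistant.check_for_tools(run, self.thread_id)
        if tool_check:
            print("Tools ran...")
        else:
            print("No tools ran...")

        # Send the assistant's latest reply back to the chat
        message = assistant.return_message(self.thread_id)
        await turn_context.send_activity(message)
```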

Here, we use a few helper functions from assistant.py. They could have been a single function, but I decided to split them up somewhat:

- `send_message`: add a message to the thread created earlier; we grab the text the user entered in the chat via `turn_context.activity.text`

- `check_for_tools`: check if we need to run a tool (function) like `hr_search` or `request_raise`, and add the tool results to the messages

- `return_message`: return the last message from the messages array; the bot sends it back to the chat via `turn_context.send_activity`, which is number 5 in the diagram
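The actual `return_message` lives in assistant.py on GitHub. As a rough idea of what such a helper involves, here is a sketch assuming the openai Python package (1.x), where `client.beta.threads.messages.list` returns the newest message first when `order="desc"` and a text block's content sits in `block.text.value`. Unlike the description above, it skips messages that are not from the assistant, which is my own assumption. `text_message` is a hypothetical stand-in for a real message object so the function can be tried without Azure.

```python
from types import SimpleNamespace
from typing import Any


def text_message(role: str, text: str) -> SimpleNamespace:
    # Hypothetical stand-in for an openai Message with one text block,
    # handy for trying return_message without an Azure OpenAI resource.
    block = SimpleNamespace(type="text", text=SimpleNamespace(value=text))
    return SimpleNamespace(role=role, content=[block])


def return_message(client: Any, thread_id: str) -> str:
    """Return the text of the newest assistant message in a thread (sketch)."""
    # Newest first; a handful of messages is enough to find the latest reply.
    page = client.beta.threads.messages.list(thread_id=thread_id, order="desc", limit=10)
    for message in page.data:
        if message.role != "assistant":
            continue
        # A message can contain several blocks (text, image files, ...).
        texts = [block.text.value for block in message.content if block.type == "text"]
        if texts:
            return "\n".join(texts)
    return "Sorry, I could not find a reply."
```

Passing the client in, rather than using a module-level global, makes the helper easy to exercise with a fake client in a quick test.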

💡 The stateful nature of the Azure OpenAI Assistants API is a great help here. Without it, we would need to use the Chat Completions API and find a way to manage the chat history ourselves. There are various ways to do that, but not having to do it at all is easier!
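To make the comparison concrete, this is roughly what managing the chat history yourself means with Chat Completions. It is a minimal sketch, assuming a plain list of role/content dictionaries and a hypothetical policy of keeping only the last few exchanges; `record_turn` and `build_messages` are made-up names. The list it builds is what you would pass as `messages` to `client.chat.completions.create`.

```python
from typing import Dict, List

Message = Dict[str, str]


def record_turn(history: List[Message], user_text: str, assistant_text: str) -> None:
    # Append one user/assistant exchange; the Chat Completions API stores nothing for you.
    history.append({"role": "user", "content": user_text})
    history.append({"role": "assistant", "content": assistant_text})


def build_messages(system_prompt: str, history: List[Message], user_text: str,
                   max_turns: int = 5) -> List[Message]:
    """Build the `messages` list for the next Chat Completions request (sketch).

    Keeps the system prompt, the last `max_turns` exchanges, and the new user
    message. Dropping older turns is an assumed policy; summarising them is
    another option.
    """
    # Each exchange is two entries; guard 0 because history[-0:] is the whole list.
    recent = history[-2 * max_turns:] if max_turns > 0 else []
    return [{"role": "system", "content": system_prompt}, *recent,
            {"role": "user", "content": user_text}]
```

With the Assistants API, all of this bookkeeping happens on the service side: you add the new message to the thread and start a run.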

A look at assistant.py

Check assistant.py on GitHub for the details. It contains the helper functions called from on_message_activity.

In assistant.py, the following happens:

- Load environment variables from `../../.env`

- Initialise the `AzureOpenAI` client

- Use a hardcoded assistant ID; see https://atomic-temporary-16150886.wpcomstaging.com/2024/02/10/retrieval-with-the-azure-openai-assistants-api/ for more information

- Load and split the PDF file

- Create a Chroma in-memory vector database

- Define a helper function to query the Chroma database

If you have read the previous blog post on retrieval, you should already be familiar with all of the above.

What’s new are the assistant helper functions that get called from the bot.

- `create_thread`: creates a thread and returns the thread ID

- `wait_for_run`: waits for a thread run to complete and returns the run; used internally and never called from the bot code

- `check_for_tools`: checks a run for `required_action`, performs the actions by running the functions, and returns the results to the Assistants API; we have two functions: `hr_query` and `request_raise`

- `send_message`: sends the message picked up from the bot to the assistant

- `return_message`: picks the latest message from the messages in a thread and returns it to the bot
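To illustrate the polling and tool-calling steps listed above, here is a minimal sketch of `wait_for_run` and `check_for_tools` against the Assistants endpoints of the openai Python package (1.x): `runs.retrieve` and `runs.submit_tool_outputs`. It is not the code from the GitHub sample. The client and a dictionary of tool functions are passed in explicitly, so the signatures differ from the `check_for_tools(run, thread_id)` call in the bot, and the `tools` mapping (for example `{"hr_query": ..., "request_raise": ...}`) is an assumption.

```python
import json
import time
from typing import Any, Callable, Dict


def wait_for_run(client: Any, thread_id: str, run: Any, poll_seconds: float = 1.0) -> Any:
    # Poll until the run is no longer queued or in progress.
    while run.status in ("queued", "in_progress"):
        time.sleep(poll_seconds)
        run = client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run.id)
    return run


def check_for_tools(client: Any, thread_id: str, run: Any,
                    tools: Dict[str, Callable[..., Any]], poll_seconds: float = 1.0) -> bool:
    """Execute requested function calls and hand results back; True if any ran."""
    ran_tools = False
    # The assistant may ask for more calls after seeing earlier results.
    while run.status == "requires_action":
        outputs = []
        for call in run.required_action.submit_tool_outputs.tool_calls:
            # Arguments arrive as a JSON string, e.g. '{"query": "holidays"}'.
            args = json.loads(call.function.arguments or "{}")
            func = tools.get(call.function.name)
            result = func(**args) if func else {"error": f"unknown function {call.function.name}"}
            # Tool outputs must be strings.
            output = result if isinstance(result, str) else json.dumps(result)
            outputs.append({"tool_call_id": call.id, "output": output})
        run = client.beta.threads.runs.submit_tool_outputs(
            thread_id=thread_id, run_id=run.id, tool_outputs=outputs
        )
        run = wait_for_run(client, thread_id, run, poll_seconds)
        ran_tools = True
    return ran_tools
```

Looping while the status is `requires_action` covers the case where the assistant asks for another function call after seeing the first result. A production version would also want a timeout and explicit handling of the `failed`, `cancelled` and `expired` statuses.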

Getting started is relatively easy. However, building a chat bot that does exactly what you want, and refuses to do what you don’t want, is not easy at all.

Should you do this?

Combining the Bot Framework SDK with OpenAI is a well-established practice. You get the enterprise-ready features of the Bot Framework combined with the excellent conversational capabilities of LLMs. At the moment, production bots typically use the OpenAI Chat Completions API. Because that API is stateless, you need to maintain the chat history yourself and send it with every request so the model is aware of the conversation so far.

As already discussed, the Assistants API is stateful. That makes it very easy to send a message and get the response. The API takes care of chat history management.

As long as the Assistants API does not offer ways to control the chat history, for example by limiting the number of interactions or summarising the conversation, I would not use it in production. That is not recommended anyway, because the API is in preview (as of February 2024).

However, as soon as the API is generally available and offers chat history control, using it with the Bot Framework SDK, in my opinion, is the way to go.

For now, as a workaround, you could limit the number of interactions and present a button to start a new thread if the user wants to continue. Chat history is lost at that moment but at least the user will be aware of it.
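The workaround can be as simple as counting messages per thread. A minimal sketch, assuming a hypothetical `TurnLimiter` kept alongside the thread ID and an arbitrary default of 10 user messages per thread:

```python
from dataclasses import dataclass


@dataclass
class TurnLimiter:
    """Hypothetical helper: caps the number of user messages per assistant thread."""

    max_turns: int = 10
    turns: int = 0

    def register_turn(self) -> bool:
        # Count this message; True while the thread is still within the limit.
        self.turns += 1
        return self.turns <= self.max_turns

    def reset(self) -> None:
        # Call after creating a new thread (e.g. when the user starts a new chat).
        self.turns = 0
```

In `on_message_activity` you could call `register_turn()` first and, once it returns False, skip the assistant call and instead reply with a suggested action (for example built with `MessageFactory.suggested_actions`) that starts a new thread and calls `reset()`.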

Conclusion

The OpenAI Assistants API and the Bot Framework SDK are a great match for creating chat bots that feel much more natural than bots built with the Bot Framework SDK alone. The statefulness of the Assistants API also makes it easier to work with than the Chat Completions API.

This post did not discuss connecting Bot Framework bots to Azure Bot Service. Doing so makes it easy to add your bot to multiple channels such as Teams, SMS, a web chat control and much more. We’ll keep that for another post. Maybe! 😀